Earlier this year, we released our wellbeing report, Wellbeing Insights from Social Media Professionals, to shed light on the real impact of social media moderation on those doing the work every day. The results confirmed what many of us already suspected: burnout is widespread, boundaries are blurred, and too many people feel unseen and unsupported. But it doesn’t have to be this way. Leaders have the power to shape a more sustainable, compassionate, and effective working environment. Here are a few reflections to help you start or continue that journey.

Are your ways of working truly flexible?

Our report found that 91% of respondents worked beyond their contracted hours, with many doing so daily. For many, it’s not a choice; it’s the only way they can stay on top of things. But that level of overwork isn’t sustainable. Social media isn’t a 9–5 job, and we shouldn’t treat it like one. Leaders should ask: is the structure we’re using fit for purpose? Could we rethink hours, responsibilities, or job design to better reflect the realities of this role and the needs of the people doing it? Flexibility shouldn’t mean working all the time. It should mean building a setup that works for both the charity and the people behind the screen.

Do people understand what your moderation team is actually dealing with?

A consistent theme in our research: people just want their work to be acknowledged. Moderation often happens quietly, behind the scenes. But it’s not invisible to the person doing it, especially when that work involves exposure to harmful content or difficult conversations. Could you create space to share this work with others in your organisation? Whether it’s through internal meetings, team days, or informal check-ins, more visibility leads to more empathy, and that goes a long way.

Are you connecting the dots across departments?

Your social media team has front-row insight into how your audiences feel. They’re hearing the unfiltered feedback. Are you using that insight effectively? Strong communication between departments doesn’t just improve team culture – it also improves results. Make sure your moderation team is looped in early when planning content and campaigns. Preparation and reflection should be standard, not an afterthought.

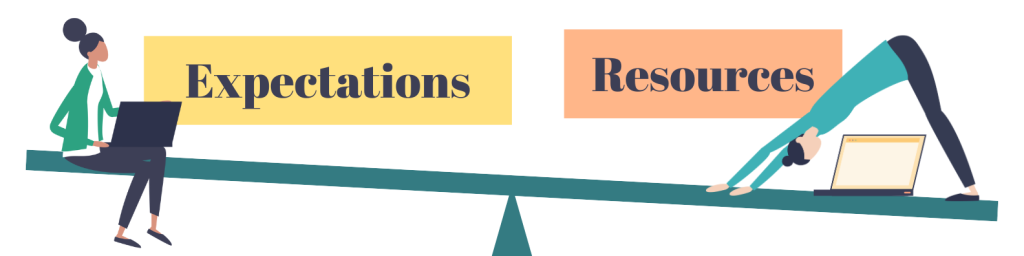

Are your expectations aligned with your resources?

Social media may look effortless, but it’s far from it. Behind every post or comment is planning, nuance, and emotional labour. Before asking your team to do more, pause and ask: do I really understand how long this takes, or what they’re navigating? It’s not about lowering the bar; it’s about leading with empathy and insight.

Has your team been set up to succeed?

Few roles evolve as rapidly as social media. Yet many teams are expected to just “keep up” without proper training. Add in the emotional toll of moderating online spaces, and you’ve got a serious wellbeing challenge. Training is more than skill development; it’s a protective factor. If your team is facing challenging or harmful content regularly (and they probably are), they deserve tools to manage that safely and confidently.

Is your wellbeing support more than just lip service?

Every single person in our survey reported exposure to harmful or hateful content. If that were happening face-to-face, how would your organisation respond? Would there be protocols in place? Support offered? Now compare that to the support your team currently receives for online harms. If there’s a gap, it’s time to close it. Dismissiveness like “it’s just part of the job” can be incredibly damaging. Take the time to really listen to your team. Check in regularly. Build space for people to talk about what they’re experiencing, and ensure there are real, tangible pathways to support.

If you haven’t yet read our report, please consider taking 30 minutes to reflect on it. Leadership isn’t about having all the answers; it’s about being willing to ask the right questions and making space for change. You can access the report here.

And if you want to talk more about what supportive, safe, and smart moderation looks like, we’re here to help.